Abstract

PACS Data Migrations generally need to complete as soon as possible. Performance tuning PACS migrations is needed to achieve that. We summarise findings from our experience performance tuning PACS migrations from a variety of PACS vendors in use throughout the NHS.

We consider in detail two principles for Data Migration speed-up. These principles are

- reducing migration dead time

- reducing total migration work

The findings are from using Cypher IT’s PACS data migration service to migrate to 3rd party destination VNAs and PACS systems, as well as migrating to their own exoPACS VNA (vendor neutral archive).

Findings

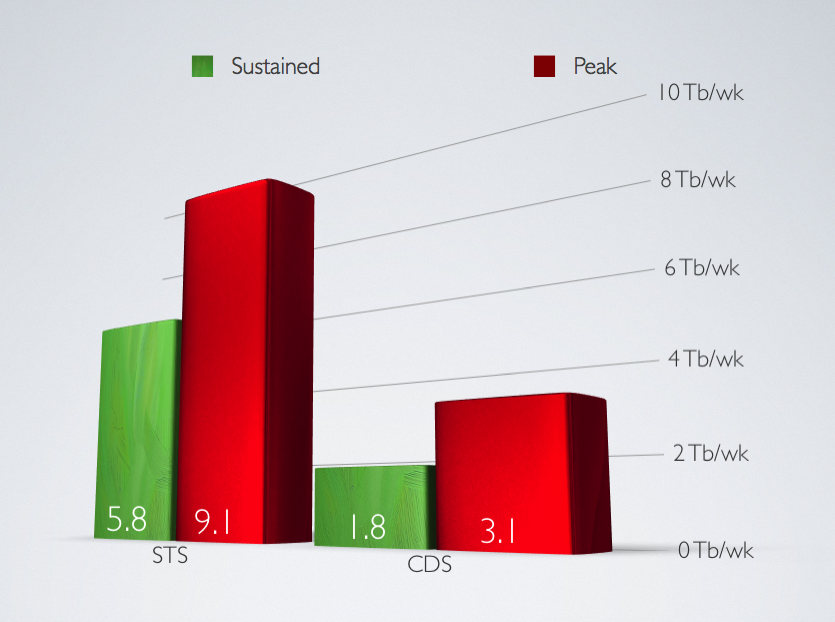

Data migration via standard DICOM services can achieve very high overall throughput by tuning for ‘dead time’ and ‘total work’, staying consistently at 60% of peak transfer rate.

With these methods, sustained throughput of 5.8Tb/week is achievable for studies already on the local PACS, with a peak throughput of 9.1Tb/week. For studies migrated from cloud storage, sustained throughput of 1.8Tb/week is achievable, with a peak transfer rate of 3.1Tb/week.

This demonstrates that

- Specialist Data Migration tools using standard DICOM services are fit for purpose

- Hospitals can avoid problems due to data migration taking too long

The throughput is based on average study size of 35Mb in the UK’s ‘Southern Cluster’ from Connecting for Health. Individual sites’ transfer rates may vary. The main factors are network bandwidth, number/performance of DICOM devices available on PACS, and specialist data migration tuning.
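To put these figures in context, the short calculation below converts the quoted weekly throughput into an approximate number of studies per week and checks the ratio of sustained to peak throughput. It is only a back-of-envelope sketch: the units are used exactly as quoted above, decimal prefixes are an assumption, and the function names are ours for illustration.

```python
# Figures quoted in the text; units kept as written (Tb/week, Mb per study).
AVG_STUDY_MB = 35
MB_PER_TB = 1_000_000  # assumption: decimal prefixes (1 Tb = 1,000,000 Mb)


def studies_per_week(tb_per_week, avg_study_mb=AVG_STUDY_MB):
    """Approximate number of average-sized studies moved per week."""
    return tb_per_week * MB_PER_TB / avg_study_mb


def sustained_fraction(sustained, peak):
    """Sustained throughput as a fraction of peak throughput."""
    return sustained / peak


local_studies = studies_per_week(5.8)   # studies already on the local store
cloud_studies = studies_per_week(1.8)   # studies recalled from cloud storage

print(f"local: ~{local_studies:,.0f} studies/week, "
      f"{sustained_fraction(5.8, 9.1):.0%} of peak")
print(f"cloud: ~{cloud_studies:,.0f} studies/week, "
      f"{sustained_fraction(1.8, 3.1):.0%} of peak")
```

On these numbers the local-store migration moves roughly 166,000 average-sized studies per week and the cloud migration roughly 51,000, with both ratios close to the 60% of peak quoted above.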

Outline of PACS Migration Process

Cypher IT’s Continuous Data Migration (CDM) Tool queries PACS and migrates studies using DICOM C-MOVE to a DICOM listener called a Migration listener. The query is generally by date range and study location on PACS – either on local store or on cloud storage. The query is performed on one or more DICOM devices, rather than on the PACS database directly. For Agfa this DICOM device is called a Workflow Manager or WFM; for GE this DICOM device is called a DAS; for Sectra it is called an Image Server/XD.

The Migration Listener receives the studies, applies any tag morphing rules, and then migrates (C-MOVEs) to the destination archive, usually the new VNA or new PACS. Migration Listeners also report to the CDM Tool with visibility of source and destination performance, so we can throttle dynamically to achieve maximum overall throughput. Finally, the CDM Tool will validate what has been successfully received by the destination, and repeat as required for studies that did not fully arrive on the destination for whatever reason.
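The final validate-and-repeat step can be pictured as a simple loop. The sketch below is not the CDM Tool's implementation: `send` and `is_on_destination` are hypothetical callables standing in for the DICOM transfer and the destination check, and the `max_passes` limit is an assumption.

```python
def migrate_with_validation(study_uids, send, is_on_destination, max_passes=3):
    """Send studies, then re-send any that the destination does not confirm.

    `send` and `is_on_destination` are hypothetical stand-ins for the real
    transfer and validation steps. Returns the studies still missing after
    the final pass.
    """
    pending = list(study_uids)
    for _ in range(max_passes):
        if not pending:
            break
        for uid in pending:
            send(uid)
        # Keep only the studies the destination has not fully received.
        pending = [uid for uid in pending if not is_on_destination(uid)]
    return pending
```

Anything still returned after the last pass would need investigating by hand rather than being retried indefinitely.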

Reducing PACS Migration ‘Dead Time’

‘Dead time’ is any time that the migration is not running. For example, on some PACS the DICOM devices can lock up and need a reboot. When this happened in the evening, it would be many hours before a support call was worked through to conclusion and the device made usable again. Naturally, saving many hours of dead time has a huge impact on overall migration time.

The mitigation to reduce these lock-ups, as well as to allow time for the receiving PACS to ‘catch up’, is to throttle back the transfer rate. For each DICOM device, we found tuning combinations of the below settings had the greatest effect on overall throughput.

- Maximum concurrent DICOM retrieves

- Minimum concurrency before starting additional DICOM retrieves

- Study size-dependent delay before starting each additional retrieve

We tried a regular break in DICOM retrieves (for example, 10 minutes every hour), as recommended by Connecting for Health, but found this sub-optimal: it results in excessive dead time when many smaller studies are transferred (eg weekend data), and it gives the receiving PACS insufficient catch-up time when many larger studies are transferred.

Thus a study-size-dependent delay between each retrieve is required to properly optimise overall migration speed, as well as to ensure live clinical systems are not affected by the migration.
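As an illustration of how the three settings might interact, here is a minimal sketch. It shows one possible interpretation, not the CDM Tool's actual logic: the class, the function names, the default values and the linear size-to-delay rule are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class ThrottleSettings:
    # All values are illustrative assumptions, tuned per DICOM device in practice.
    max_concurrent: int = 4       # maximum concurrent DICOM retrieves
    min_concurrent: int = 2       # top up only when running retrieves drop below this
    delay_s_per_mb: float = 0.05  # size-dependent delay before each additional retrieve
    min_delay_s: float = 1.0      # floor so that tiny studies still leave a gap


def retrieves_to_start(running, settings):
    """How many new retrieves to start, given how many are running now."""
    if running >= settings.min_concurrent:
        return 0  # enough in flight; let the device and destination catch up
    return settings.max_concurrent - running


def delay_before_next(study_size_mb, settings):
    """Seconds to wait before starting the next retrieve, scaled by study size."""
    return max(settings.min_delay_s, study_size_mb * settings.delay_s_per_mb)
```

With these example values, a batch of small weekend studies waits only the 1-second floor between retrieves, while a 400Mb study earns a pause of about 20 seconds. A fixed hourly break cannot adapt to study size in this way.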

Reducing PACS Migration Total Work

‘Work’ here means the computing effort and resources spent moving studies around. Consider that any PACS that has been running for a reasonable time will have a full* local store, with older studies held on slower ‘nearterm’ storage such as jukeboxes or cloud storage.

Studies are duplicated onto cloud storage as they arrive on PACS, and newly acquired studies will push the oldest studies off the local store. The same is true for migrated data – an old study brought back from cloud storage comes into local storage before onward migration. This in turn pushes the oldest study off the local store. (When studies are ‘pushed off’, ie deleted from the local store, they are done in batches, down to a certain low-water mark. But a simplified concept of pushing off, study-by-study, is sufficient for our purposes.)

If not managed correctly, this can hugely increase the total work performed. Consider the following example to illustrate total work:

Example:

- Imagine it is 2013. PACS has data from 2007 to today. The local store has about 1.5 years’ worth of data, so it holds 2012 and 2013 data. Migrating oldest first (2007 forwards), will push 2012 data off the local store in order to migrate 2007 data. When the migration eventually gets to 2012 data, the PACS will retrieve these from Cloud storage to local storage once again, in order to migrate them. So ‘work’, or effort moving studies around, is greater than if the 2012 data was migrated first. Reducing total work avoids this issue.

We avoid the above issue by migrating the oldest local studies first – in our example 2012 forwards – before migrating cloud-only studies – in our example 2007 forwards. Given that moving studies from Cloud to local storage is generally the tightest bottleneck of the whole process (ie the slowest point), reducing the total work performed by PACS provides a significant decrease in the total time required for migration.
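The effect of migration order on total work can be shown with a toy simulation of the simplified model above. It is a sketch under stated assumptions: the local store is treated as a least-recently-used cache, studies are pushed off one at a time, no new studies arrive during the migration, and the study counts are invented for illustration.

```python
from collections import OrderedDict


def cloud_recalls(order, local_initial, capacity):
    """Count cloud-to-local recalls needed to migrate studies in `order`."""
    local = OrderedDict((s, None) for s in local_initial)  # least recent first
    recalls = 0
    for study in order:
        if study in local:
            local.move_to_end(study)       # migrating counts as an access
        else:
            recalls += 1                   # must be fetched from cloud first
            local[study] = None
            if len(local) > capacity:
                local.popitem(last=False)  # push off the least recently used
    return recalls


# 70 studies: 0 is the oldest (2007), 69 the newest (2013).
studies = list(range(70))
capacity = 15                              # local store holds the newest 15
local_initial = studies[-capacity:]

oldest_first = studies
local_first = local_initial + studies[:-capacity]

print(cloud_recalls(oldest_first, local_initial, capacity))  # 70
print(cloud_recalls(local_first, local_initial, capacity))   # 55
```

Migrating oldest first forces every study through a cloud recall, including those that started on the local store. Migrating local studies first limits recalls to the studies that were cloud-only to begin with.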

Ensuring Recent Studies stay local & online

PACS keep the most recently accessed studies online on the local store. Normally this works well, but a migration can skew things. For example, old studies migrated yesterday are more ‘recent’ than studies taken 2 days ago. We know that the 2-day-old study is more clinically relevant than the 2012 study migrated 1 day ago, but the PACS algorithm doesn’t know that. Ultimately this can mean the new study is ‘pushed off’ the local store by migrated studies.

If we allow clinically relevant studies to be pushed off the local store by the migration, then as well as interrupting and slowing clinical work, this also increases the to-ing and fro-ing of recently acquired studies between local storage and cloud storage. Bandwidth and PACS resources used for this additional work should instead be applied to the data migration.

We therefore ‘re-date’ recently acquired studies to ensure they are not pushed off local storage by the migration. This further reduces total work performed and is important for optimising overall migration speed, as well as not impacting live clinical systems.

To ensure vendor independence, Cypher IT re-date using standard DICOM services rather than vendor-specific scripts. This means that all sites can benefit from re-dating irrespective of their PACS vendor.
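The effect of re-dating can be illustrated with the same simplified model of the local store as a least-recently-used cache. The sketch below models only the outcome, not the DICOM mechanism used to re-date: the function name, the `redate_every` parameter and the study labels are all hypothetical.

```python
from collections import OrderedDict


def recent_studies_kept(recent, migrated, capacity, redate_every=None):
    """Return the recent studies still on the local store after a migration.

    `redate_every=n` re-dates (touches) the recent studies after every n
    migrated studies; `None` means no re-dating is performed.
    """
    local = OrderedDict((s, None) for s in recent)  # least recent first
    for i, study in enumerate(migrated, start=1):
        local[study] = None                    # migrated study lands on local store
        if redate_every and i % redate_every == 0:
            for s in recent:
                if s in local:
                    local.move_to_end(s)       # re-date: mark as most recent
        while len(local) > capacity:
            local.popitem(last=False)          # push off the least recently used
    return [s for s in recent if s in local]


recent = ["new-1", "new-2"]
migrated = [f"old-{n}" for n in range(10)]

print(recent_studies_kept(recent, migrated, capacity=5))                  # []
print(recent_studies_kept(recent, migrated, capacity=5, redate_every=2))  # ['new-1', 'new-2']
```

Without re-dating, the migrated studies displace both recent studies. With re-dating, only migrated studies are pushed off.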

*Local storage is often only used up to a certain high-water mark, but for our purposes we can simplify the concept and consider this being ‘full’.

This was first published as an ePoster at UKRC 2013. It has been edited from the original for jargon and readability.
